The sheer volume of online news makes it hard to stay informed without being overwhelmed. At MediaX, we're proud to share research aimed at changing how Vietnamese news is consumed. Led by Nguyễn Đình Tuấn, our research team has developed an approach to news summarization that uses the BERT model to generate concise, informative summaries of Vietnamese news articles.

Addressing the Challenge of Vietnamese News Summarization

Vietnamese poses particular challenges for automatic text summarization: whitespace separates syllables rather than words, and meaning depends heavily on word order and context. Traditional summarization methods often struggle to produce coherent summaries that capture the nuances of the original articles. This motivated us to look for a solution that handles the complexities of the Vietnamese language while preserving the essence of the original content.

Introducing the BLLA Model: A Novel Approach to Summarization

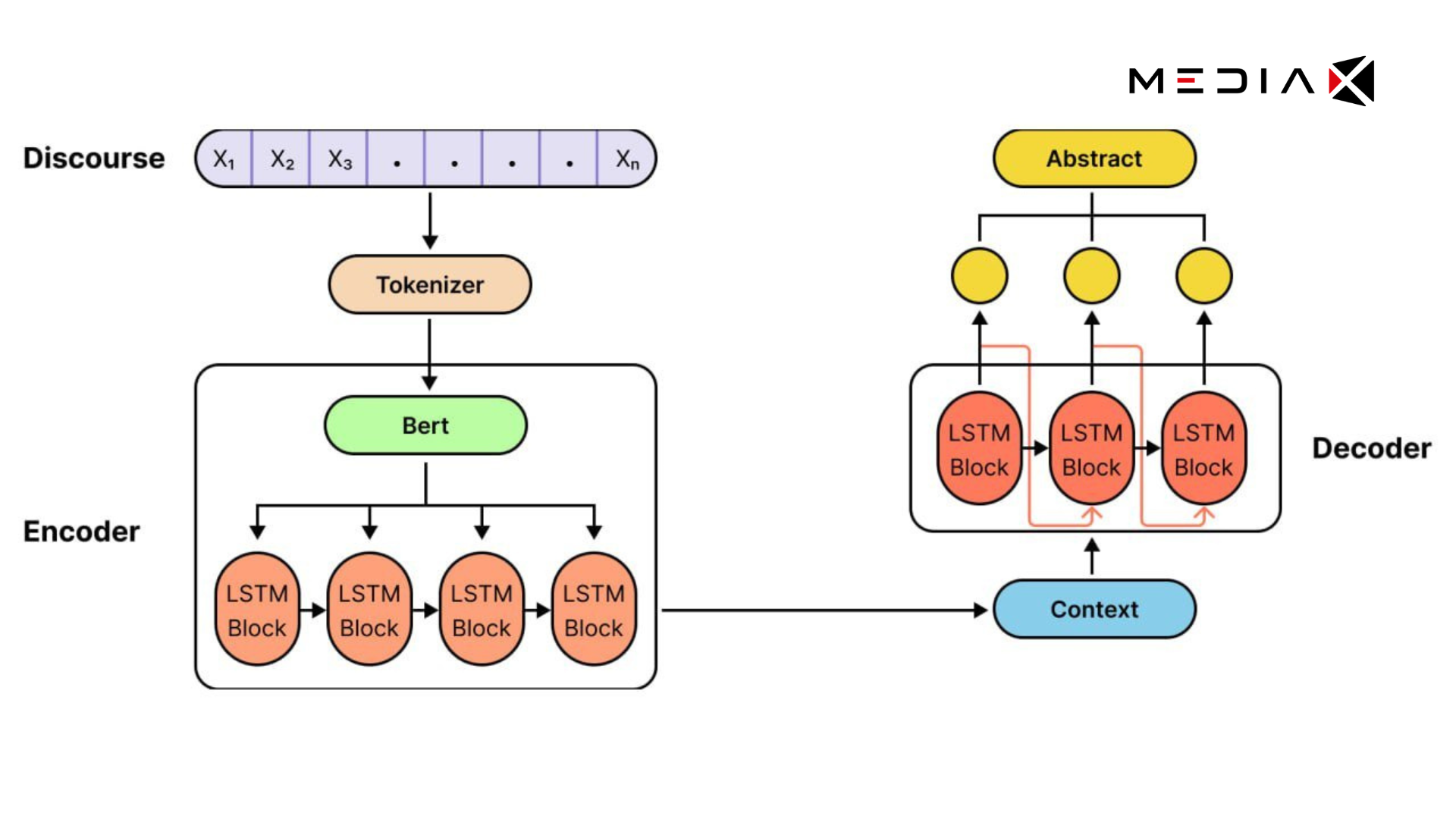

Our research resulted in the BERT-LSTM-LSTM with Attention (BLLA) model. The model combines BERT's contextual representations with the sequential processing of LSTM networks and adds an attention mechanism on top. Together, these components summarize Vietnamese news articles and aim to produce summaries that are both accurate and coherent.

- Deep Contextual Understanding with BERT: The BLLA model uses BERT to encode Vietnamese text in context, which helps it capture subtle meanings within news articles.

- Sequential Processing with LSTM: Building on BERT's representations, the LSTM layers handle the sequence-to-sequence part of summarization, so that the generated summaries reflect the intent of the original articles.

- Focused Summarization with Attention Mechanism: The attention layer lets the model focus on the most relevant parts of a news article while generating each part of the summary, keeping summaries concise without losing key information.
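
To make the attention step more concrete, here is a minimal sketch of dot-product attention in plain Python. It is an illustration under assumptions, not the BLLA implementation: this post does not state which scoring function BLLA uses, so dot-product scoring is assumed, and the names `attend`, `encoder_states`, and `decoder_state` are hypothetical.

```python
import math


def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(decoder_state, encoder_states):
    """Return (weights, context) for a single decoding step.

    Assumption: relevance is scored with a plain dot product between the
    decoder state and each encoder state.
    """
    scores = [dot(decoder_state, h) for h in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # The context vector is the attention-weighted sum of encoder states.
    context = [
        sum(w * h[i] for w, h in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Toy example: three encoder positions with two-dimensional states.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
weights, context = attend(decoder_state, encoder_states)
print(weights, context)
```

In this toy example the first and third positions score highest against the decoder state, so they receive equal, larger weights than the second position. In a real model the states would come from the BERT and LSTM layers rather than being written by hand.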

Building the Datasets

A key part of our research was building two datasets to train the BLLA model at different levels of complexity:

- The Simplified Dataset: Pairs each article's title with three sentences from the article, focusing on summarizing the core message.

- The Complex Dataset: Pairs ten sentences from each article with a summary that was first generated with the GPT API and then refined by human editors. This dataset trains the BLLA model to handle more detailed narratives.

These datasets were instrumental in training our model to navigate the intricacies of Vietnamese news summarization, from basic tasks to more complex challenges.
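
As a rough illustration of how an example in the Simplified Dataset might be assembled, the sketch below pairs a title with three sentences from an article body. Several details are assumptions rather than facts from our pipeline: that the first three sentences are used, that the sentences are the input and the title is the target, the naive punctuation-based sentence splitter, and the names `split_sentences` and `make_pair`.

```python
import re


def split_sentences(text):
    # Naive splitter (assumption): break after ., ! or ? followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def make_pair(title, body, num_sentences=3):
    """Build one training example: a few sentences in, the title out."""
    sentences = split_sentences(body)[:num_sentences]
    return {"source": " ".join(sentences), "target": title.strip()}


# Hypothetical article used only to show the shape of one example.
title = "Hà Nội ngập sau mưa lớn"
body = (
    "Hà Nội có mưa lớn. Nhiều tuyến phố bị ngập. "
    "Giao thông ùn tắc kéo dài. Thời tiết sẽ cải thiện vào cuối tuần."
)
pair = make_pair(title, body)
print(pair)
```

A production pipeline would likely need a Vietnamese-aware sentence splitter and word segmenter, since punctuation-based splitting breaks on abbreviations and numbers.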

Results

The BLLA model performed well at summarizing Vietnamese news articles. The variant built on PhoBERT achieved the highest BLEU scores, surpassing both the BERT base and traditional LSTM models at every n-gram level. Specifically, the BLLA-phoBERT variant achieved BLEU-1 through BLEU-4 scores of 68.08, 58.53, 50.06, and 41.89, respectively.

These results point to the model's potential to improve how news is consumed and disseminated in Vietnamese, and they give us a benchmark for future work on AI-driven Vietnamese text summarization.
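
For readers less familiar with BLEU, the sketch below shows how BLEU-1 through BLEU-4 can be computed for a single candidate summary against a single reference. It is a minimal version under stated assumptions: cumulative n-gram precision with a brevity penalty, whitespace tokenization, no smoothing, and scores on a 0 to 1 scale (the scores reported above are on a 0 to 100 scale). This post does not specify the exact BLEU configuration behind the reported numbers, and the function names here are illustrative.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    # Count every contiguous run of n tokens.
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    cand = ngrams(candidate, n)
    ref = ngrams(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    # Clip each candidate n-gram count by its count in the reference.
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total


def bleu(candidate, reference, max_n):
    """Cumulative BLEU up to max_n for one candidate and one reference."""
    precisions = [
        modified_precision(candidate, reference, n)
        for n in range(1, max_n + 1)
    ]
    if min(precisions) == 0.0:
        return 0.0  # No smoothing: any zero precision gives a zero score.
    log_mean = sum(math.log(p) for p in precisions) / max_n
    c, r = len(candidate), len(reference)
    # Brevity penalty: candidates shorter than the reference are penalized.
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_mean)


reference = "the cat sat on the mat".split()
candidate = "the cat sat on a mat".split()
for n in range(1, 5):
    print(f"BLEU-{n}: {bleu(candidate, reference, n):.4f}")
```

Because longer n-grams are harder to match, scores typically fall from BLEU-1 to BLEU-4, which is the same pattern seen in the reported results.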

Looking Forward

This research marks a significant advancement in our understanding and application of AI in language processing. At MediaX, we are committed to continuing our exploration of AI technologies to create solutions that make information more accessible and engaging for everyone.

Stay tuned for further updates as we refine our models and explore new frontiers in natural language processing and beyond.